#json metadata


Text

least favorite part of job is how they expect me to write bland pull request descriptions for shit that fucking broke me. "ensures the frobnicator tool preserves the new frobnicator metadata fields instead of burying them under a tree by the light of the full moon," i write. "note that google.protobuf.json_format defaults to exporting fields in camelCase rather than snake_case, but bufbuild's generated load-from-json code refuses to even acknowledge the existence of camelCase fields, ignoring them as an Edwardian society lady might disdain a bootblack. this diff may be only ten lines long but the process of creating it has destroyed my will to live. let's all join hands and walk into the bay together so we never have to think about camel case or protobuf oneofs ever again."

and then i backspace all of it and write something normal
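For anyone hitting the same camelCase/snake_case mismatch, here is a minimal sketch of one workaround: renaming JSON keys to snake_case before handing the payload to a loader that only accepts proto field names. The helper names are hypothetical and not part of any protobuf library. On the export side, `google.protobuf.json_format.MessageToJson` also accepts `preserving_proto_field_name=True`, which avoids the mismatch at the source.

```python
import re
from typing import Any

# Zero-width boundary between a lowercase letter or digit and an uppercase letter.
_CAMEL_BOUNDARY = re.compile(r"(?<=[a-z0-9])(?=[A-Z])")


def to_snake_case(name: str) -> str:
    """Convert a camelCase identifier to snake_case."""
    return _CAMEL_BOUNDARY.sub("_", name).lower()


def snake_case_keys(value: Any) -> Any:
    """Recursively rename every dict key in a parsed JSON value.

    Caveat: this also renames keys of map fields, which hold user data
    rather than field names, so it is only safe when no such maps exist.
    """
    if isinstance(value, dict):
        return {to_snake_case(k): snake_case_keys(v) for k, v in value.items()}
    if isinstance(value, list):
        return [snake_case_keys(item) for item in value]
    return value
```

Run `snake_case_keys(json.loads(text))` on the exported JSON before loading it.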

63 notes


Text

Here’s the third exciting installment in my series about backing up one Tumblr post that absolutely no one asked for. The previous updates are linked here.

Previously on Tumblr API Hell

Some blogs returned 404 errors. After investigating with Allie's help, it turns out it’s not a sideblog issue — it’s a privacy setting. It pleases me that Tumblr's rickety API respects the word no.

Also, shoutout to the one line of code in my loop that always broke when someone reblogged without tags. Fixed it.

What I got working:

Tags added during reblogs of the post

Any added commentary (what the blog actually wrote)

Full post metadata so I can extract other information later (ie. outside the loop)

New questions I’m trying to answer:

While flailing around in the JSON trying to figure out which blog added which text (because obviously Tumblr’s rickety API doesn’t just tell you), I found that all the good stuff lives in a deeply nested structure called trail. It splits content into HTML chunks — but there’s no guarantee about order, and you have to reconstruct it yourself.
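A rough sketch of pulling "which blog added which chunk" out of a parsed post, written in Python rather than R for brevity. The field names (`trail`, `blog.name`, `content_raw`, `content`) are assumptions about the response shape and should be checked against a real API response before relying on them.

```python
from typing import Any, Dict, List, Tuple


def trail_contributions(post: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (blog_name, html) pairs in the order the trail lists them.

    Assumes each trail item has a nested "blog" object with a "name" and
    an HTML chunk under "content_raw" (falling back to "content").
    """
    pairs = []
    for item in post.get("trail", []):
        blog_name = item.get("blog", {}).get("name", "<unknown>")
        html = item.get("content_raw") or item.get("content") or ""
        pairs.append((blog_name, html))
    return pairs
```

Since the listed order is not guaranteed to match the reblog order, the pairs still need to be re-sorted by whatever ordering information the post carries.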

Here’s a stylized diagram of what trail looks like in the JSON list (which gets parsed as a data frame in R):

I started wondering:

Can I use the trail to reconstruct a version tree to see which path through the reblog chain was the most influential for the post?

This would let me ask:

Which version of the post are people reblogging?

Does added commentary increase the chance it gets reblogged again?

Are some blogs “amplifiers” — their version spreads more than others?
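One way to make the "amplifier" question concrete is to count how many reblogs descend from each blog's version. The sketch below assumes the notes have already been flattened into records with hypothetical `blog` and `reblogged_from` keys. It also treats each blog as a single node, which is a simplification if a blog reblogs the post more than once.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def build_children(reblogs: Iterable[Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each blog to the blogs that reblogged directly from it."""
    children = defaultdict(list)
    for record in reblogs:
        children[record["reblogged_from"]].append(record["blog"])
    return children


def descendant_count(children: Dict[str, List[str]], blog: str) -> int:
    """Count the distinct blogs reachable downstream of `blog` in the tree."""
    seen = set()
    stack = list(children.get(blog, []))
    while stack:
        current = stack.pop()
        if current in seen or current == blog:
            continue  # guards against repeat reblogs and cycles
        seen.add(current)
        stack.extend(children.get(current, []))
    return len(seen)
```

Sorting blogs by `descendant_count` gives a first ranking of which versions spread furthest.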

It’s worth thinking about these questions now — so I can make sure I’m collecting the right information from Tumblr’s rickety API before I run my R code on a 272K-note post.

Summary

Still backing up one post. Just me, 600+ lines of R code, and Tumblr’s API fighting it out at a Waffle House parking lot. The code’s nearly ready: I’m almost finished testing it on an 800-note post before trying it on my 272K-note Blaze post. Stay tuned…

Zero fucks given?

If you give zero fucks about my rickety API series, you can block my data science tag, #a rare data science post, or #tumblr's rickety API. But if we're mutuals then you know how it works here - you get what you get. It's up to you to curate your online experience. XD

#a rare data science post#tumblr's rickety API#fuck you API user#i'll probably make my R code available in github#there's a lot of profanity in the comments#just saying

24 notes


Text

Migrated my google photos to the storage server, all those years of photos, messages, downloads, screenshots amounts to 14GB.

It's really funny how small images can get, especially when they're taken at this level of quality.

although they're not all this potato, here's one of a cat that used to live on the university campus

As a note, your Takeout has metadata in sidecar json files instead of embedded in exif, so you need to fix it, this tool works well:
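Independently of any particular tool, here is a minimal sketch of what such a fix involves: reading the capture time from a sidecar file and applying it to the photo. It assumes the sidecar holds a `photoTakenTime.timestamp` value in Unix seconds. It only restores the file's modification time, because writing real EXIF tags needs a third-party library or tool.

```python
import json
import os
from pathlib import Path
from typing import Optional


def taken_timestamp(sidecar: Path) -> Optional[int]:
    """Read the capture time (Unix seconds) from a sidecar JSON file."""
    data = json.loads(sidecar.read_text(encoding="utf-8"))
    raw = data.get("photoTakenTime", {}).get("timestamp")
    return int(raw) if raw is not None else None


def restore_mtime(photo: Path, sidecar: Path) -> bool:
    """Set the photo's access and modification times from its sidecar.

    Returns False when the sidecar carries no usable timestamp.
    """
    timestamp = taken_timestamp(sidecar)
    if timestamp is None:
        return False
    os.utime(photo, (timestamp, timestamp))
    return True
```

How a photo is paired with its sidecar file is left to the caller, since the naming pattern in the export can vary.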

20 notes


Text

You know what, I'll just ask:

Explanation about the Programming Hell my week has been:

So I started doing a few streams earlier this year reading books aloud. I had a camera on the pages while I read, I'd comment on stuff, used ReactBot a little, at one point a whole 4 people were there.

I've been going through some bad depression moods, and I still want to stream and share books but I don't think I can talk for that long when my mood makes me go nonverbal. I know part of the mood slump is related to not having basically any audience, and I know I won't gain an audience by only streaming once a month or so. So I need to keep streaming even when I'm feeling nonverbal.

The solution I thought of was using TTS (text-to-speech) on books I have saved to my PC, using something like Calibre to read them in the background. There's free to use open source voices, but I wanted to make my own so it still kinda sounded like me and didn't steal someone's likeness. I don't like the idea of making content using someone else's voice, so I looked into making one with my own.

First I looked at the paid programs with good UIs, but not only are they really fucking expensive, they also have hardcoded length limits even on the paid version. I can't get those to read a full book unless I only go at around 30 minutes of reading a month while paying up to $100 monthly to do it, which isn't really an option. I'm on disability, I can't afford that shit.

So I looked at the open source options bc open source means it's free. My problem: I can't code to save my life and my experience in the past with programming forums like GitHub and Reddit has been people becoming condescending or hostile when I explain that I have been actively trying to learn to code for 10+ years and it never works. It's interpreted as me not trying or taking "the easy route" because I can't do even the simple stuff. I wish I could. I gave it an honest shot this time, I spent so much fucking time in my command prompt, typing in installs and running python commands, searching errors online to figure out what was going wrong, editing py files and json files and yaml files, learning how to use Audacity to read the metadata of my recordings and make sure they were the right sample rate (Hz) to use in training--and none of it worked. Even when everything I found on horribly formatted GitHub directories SAID it should have been working, it was just a big non functional pile of errors.

So I've given up on making a TTS clone with my voice bc I can't get these "easy codes" to work and the paid ones don't work for what I want or are ridiculously expensive (and mostly still have limits on usage length that mean they wouldn't work).

If anyone reads this and knows how to get these things working properly for cloning--Coqui, Tortoise, Piper, XTTS, any other open source one--reach out and let me know how to do it, because I really tried. Better yet, if you can prove you're trustworthy and are willing to do it, I would love you forever; I can't offer any payment bc I'm on permanent disability and can barely afford things like rent and food, but I would be eternally grateful and willing to draw you stuff and give you a shoutout if that's worth anything to you.

To anyone saying "Just learn to code, you can't give up after a week": This has been an ongoing thing. This is just the latest adventure in "Jasper is failing to learn Python at every turn", along with "why I hate Ren'Py" (that one's been ongoing for years now) and "GitHub has really user-unfriendly layouts" (ever since I got my Graphic Design degree 10 years ago)

#my stream#stream updates#twitch#biggest hurdle i have with reading the books this way is it feels less transformative so ill have to stick to public domain#same reason I'm not using existing audiobooks

3 notes


Text

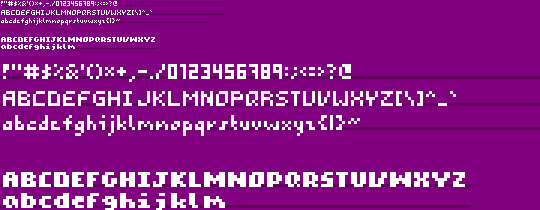

when doing pixel graphics, especially low res pixel graphics, it's not necessarily all that simple and straightforward or even particularly desirable to use the kind of vector fonts typically in use by modern graphical OSes, so instead I'm manually assembling a few bitmap fonts for the purpose.

Current plan is to then write a fairly simple python script to read the resulting .png file(s) along with any necessary metadata via json or something and encode it in a basic binary format - thinking of going with two bits per pixel at the moment, using one for transparency and the other for colour so I can have characters with built in shadow or the like if I want.
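As a sketch of what that two-bits-per-pixel encoding could look like: each pixel becomes a pair of bits (opaque, colour), packed four pixels to a byte with the first pixel in the highest bits. The bit order and the function names are assumptions made for illustration. Reading the .png itself is left out because it needs an image library.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[int, int]  # (opaque bit, colour bit)


def pack_2bpp(pixels: Iterable[Pixel]) -> bytes:
    """Pack (opaque, colour) bit pairs into bytes, four pixels per byte."""
    out = bytearray()
    byte = 0
    count = 0
    for opaque, colour in pixels:
        byte = (byte << 2) | ((opaque & 1) << 1) | (colour & 1)
        count += 1
        if count == 4:
            out.append(byte)
            byte = 0
            count = 0
    if count:
        # Pad the final partial byte with transparent pixels on the right.
        out.append(byte << (2 * (4 - count)))
    return bytes(out)


def unpack_2bpp(data: bytes, n_pixels: int) -> List[Pixel]:
    """Inverse of pack_2bpp for the first n_pixels pixels."""
    pixels = []
    for i in range(n_pixels):
        shift = 6 - 2 * (i % 4)
        pair = (data[i // 4] >> shift) & 0b11
        pixels.append((pair >> 1, pair & 1))
    return pixels
```

An 8x8 glyph takes 16 bytes this way, and the width and height would travel in the JSON metadata.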

Anyway being able to write debug info directly to the graphical window rather than having to use the slower console output would be helpful when writing more advanced blitting and rasterization functions so maybe I can finally make some progress on the actual graphical parts of this thing.

6 notes


Note

Ooh, a few quick shrink ray qs: does the format include any special considerations for transmission of metadata like resolution, pixel aspect ratio, and the like beyond just palette? And given its design for transmission across the network in a single packet, does it have any kinds of bounds checking or checksums, or does it rely on the transport layer to get there reliably? And does the format define a max resolution for either x or y?

The format includes an upscaling factor and resolution, other metadata could definitely be included and I think I'll add an optional json field for immediate extensibility to that end.

The transport layer will have built in checksums but maybe I could add the ability to generate them along Shrink Ray files. I think this would be a good thing to add to the issues of the repo once it's up.

The guidelines recommend sticking to below 256x256px but it's more about (I assume but will check) the gains going down the larger the image size

5 notes


Text

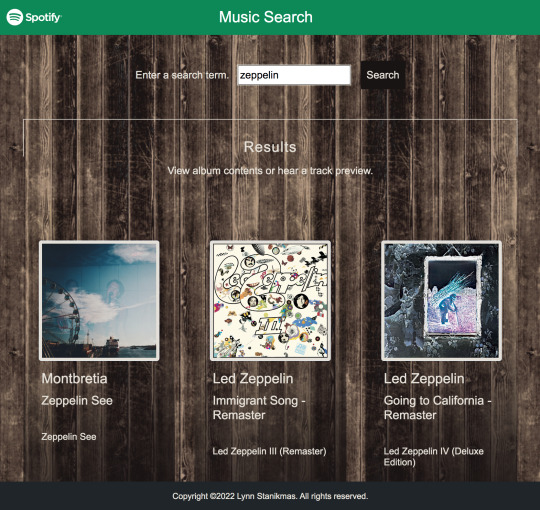

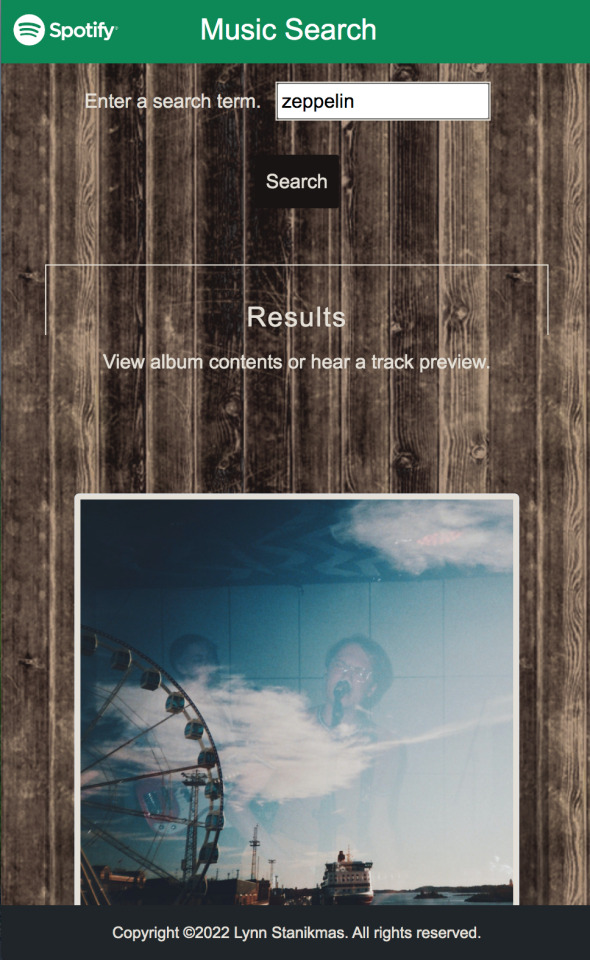

Angular SPA integrated with the Spotify Web API returns JSON metadata about music artists, albums, and tracks, directly from the Spotify Data Catalogue.

#angular#spotify#my music#firebase#firestore#data#database#backend#html5#frontend#coding#responsive web design company#responsivewebsite#responsivedesign#responsive web development#web development#web developers#software development#software#development#information technology#developer#technology#engineering#ui ux development services#ui#ui ux design#uidesign#ux#user interface

2 notes


Text

Audio Editing Process

Hey all, just thought I'd make a post regarding the process I used to make the new audio for the Northstar Client mod I'm working on.

Really quick here are some helpful sites

First, the software. You'll need to download

Audacity

Northstar Client (NC. Also I'm using the VTOL version)

Steam

Titanfall 2

Legion+ (from same site as NC)

Titanfall.vpk (also from NC)

Visual Studio Code(for making changes to .json files if necessary)

And that should be about it for now. Feel free to use whatever audio program you want. I just use Audacity cuz it's free and easy to use.

NOTE: if you want to skip a lot of headache and cut out the use of Legion+ and Tfall.vpk you can download a mod from Thunderstore.io and then just replace all the audio files with your new ones

This makes pulling a copy of an original unedited file for changes (if necessary) super easy, as well as making the exported file easy to find

Step 1.

First you'll want to get organized. Create a folder for all your mod assets, existing or otherwise and try to limit any folders within to a depth of one to make them easier to navigate/find stuff.

Example being:

<path>/mod_folder

|-->voicelines_raw (place all your audio in here)

|-->voicelines_edited (export to this folder)

|-->misc (add however many folders you need)

Above is an example of a folder with a depth of 1
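If you'd rather script the layout than click through a file manager, a small sketch like this creates the same depth-1 structure. The folder names are the ones from the example above, and the root path is whatever you choose.

```python
from pathlib import Path
from typing import List, Sequence


def create_mod_folders(
    root: Path,
    names: Sequence[str] = ("voicelines_raw", "voicelines_edited", "misc"),
) -> List[Path]:
    """Create the mod folder and its depth-1 subfolders if they are missing."""
    created = []
    for name in names:
        folder = root / name
        folder.mkdir(parents=True, exist_ok=True)  # safe to re-run
        created.append(folder)
    return created
```

For example, `create_mod_folders(Path("mod_folder"))` builds the three subfolders under `mod_folder` in the current directory.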

First select your audio from the file you created earlier and import it into Audacity

Step 2.

Put any and all audio into their proper folder and open Audacity.

For my project I need to have some voicelines made separately for when inside the titan, and when outside.

These instances are prefaced with a file tag "auto" and look like this:

diag_gs_titanNorthstar_prime_autoEngageGrunt

Inside "audio" in the mod folder you will see a list of folders followed by .json files. WE ONLY WANT TO CHANGE THE FOLDERS.

Open an audio folder and delete the audio file within, or keep it as a sound reference if you're using Legion+ or Titanfall vpk.

Step 3.

Edit your audio

Click this button and open your audio settings to make sure they're correct. REMINDER: 48000 Hz and 2 channels (stereo)

As you can see, I forgot to change this one to channel two. Another important note: you'll want to change the Project Sample Rate with the dropdown, as pictured, and not just the default sample rate.

In this example I needed to make the voiceline sound like it was over a radio and I accomplished that by using the filter and curve EQ. This ended up being a scrapped version of the voiceline so be sure to play around and test things to make sure they sound good to you!

Afterwards your audio may look like this. If you're new, this is bad and it sounds like garbage when it's exported but luckily it's an easy fix!

Navigate to the effects panel and use Clip Fix. play around with this until the audio doesn't sound like crap.

Much better! Audio is still peaking in some sections but now it doesn't sound like it's being passed through a $10 USD mic inside a turbojet engine.

Next you'll need to make sure you remove any metadata as it will cause an ear piercing static noise in-game if left in. Find it in the Edit tab.

Make sure there's nothing in the Value field and you're good to go!

Export your project as a WAV and select the dedicated output folder

Keep your naming convention simple and consistent! If you're making multiple voicelines for a single instance, be sure to give them an end tag like _01, as demonstrated in the picture above.
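To double-check an exported file without reopening Audacity, a short script can read the WAV header and confirm the 48000 Hz stereo settings mentioned earlier. This is a minimal sketch using Python's standard `wave` module, which handles plain PCM WAV files. The function names are made up for illustration.

```python
import wave
from typing import Tuple


def wav_format(path: str) -> Tuple[int, int]:
    """Return (sample_rate_hz, channel_count) read from a PCM WAV header."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate(), wav.getnchannels()


def is_game_ready(path: str) -> bool:
    """True when the file is 48000 Hz with 2 channels (stereo)."""
    rate, channels = wav_format(path)
    return rate == 48000 and channels == 2
```

Running `is_game_ready` over everything in the export folder catches a wrong project sample rate before the file goes into the mod.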

Step 4.

You're almost done! Now you can take audio from the export folder and copy+paste it right into the proper folder within the mod. You can delete the original audio from the mod folder at any time.

Also you won't need to make any changes to the .json files either unless you're creating a mod from scratch

NOTE: As of right now i have not resolved the issue of audio only playing out of one ear. I will make an update post about this once I have found a solution. Further research leads me to believe that the mod I am using is missing some file but recreating those is really easy once you know where to look. Hint: it's in the extracted vpk files

#modding#mods#titanfall#titanfall 2#titanfallmoddy#audio editing#northstar client#northstar#vpk#legion+

2 notes


Text

Top Mana Media Marketing Trends You Can’t Ignore in 2025

As a digital marketing agency in Bangalore, Mana Media Marketing is embracing the future of digital marketing, leveraging innovation to help clients thrive. In 2025, agencies and digital marketing companies must master a powerful blend of advanced tools, data-driven strategies, and creative expression across your digital marketing website, social media, and search presence.

1. AI‑Driven Hyper‑Personalization & Chatbots

AI continues to redefine online digital marketing:

Generative AI and machine learning craft real-time, customized email flows, ads, and content for users. According to Deloitte, 75% of consumers prefer personalized experiences, and nearly half of brands embracing personalization exceed revenue goals.

Chatbots using natural language processing mimic human interaction while automating responses 24/7—great for engaging audiences on your digital marketing website and Facebook Messenger.

Mana Media tip: Embed AI chatbots that guide visitors based on their browsing patterns, and use AI to A/B test subject lines and call-to-actions for your digital marketing agency clients.

2. Conversational SEO & Voice‑First Strategy

Voice search is no longer niche—it’s essential:

With smart speaker use surging and voice commerce expanding, optimizing for natural speech queries like “best marketing agency near me” is key

FAQ sections written conversationally boost SEO and capture position-zero snippets.

Mana Media tip: Audit blog and service pages to pepper in local, voice-style keywords (“Find a reliable Digital Marketing agency in Bangalore”). Add FAQ schema to increase voice search visibility.
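As a rough illustration of the FAQ schema mentioned in the tip, the sketch below builds a schema.org `FAQPage` object as JSON-LD from question/answer pairs. The function name is hypothetical, and the output still needs to be embedded in the page and validated.

```python
import json
from typing import Iterable, Tuple


def faq_jsonld(faqs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)
```

The resulting string goes inside a `<script type="application/ld+json">` element on the page that shows the same questions and answers.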

3. Short‑Form Video & Social Commerce

Snackable, engaging visual content rules:

Platforms like TikTok, Instagram Reels, and YouTube Shorts dominate user attention—and drive sales

Social commerce is booming: consumers can now discover and buy seamlessly via shoppable posts and in-app checkout

Mana Media tip: Create punchy 30–45 second videos for service demos or client results. Use clear CTAs and embed product/service links for direct purchase or signup.

4. AR/VR Immersion

Immersive tech adds “wow” to engagement:

AR/VR let users visualize products and services in real-time, boosting interaction and conversion rates significantly

Brands like IKEA and Sephora use virtual try-ons—driving richer customer experiences

Mana Media tip: Host interactive demos on your digital marketing website—e.g., AR overlays showing infographics on campaign results or virtual tours of client stories.

5. First‑Party & Zero‑Party Data with Privacy

As cookies phase out, user consent matters more:

Collect first- and zero-party data through interactions: quizzes, gated guides, chatbot opt-ins

Emphasize transparency—explain how data will be used for email segmentation, personalization, and tailored marketing and SEO.

Mana Media tip: Offer a free “Local SEO checklist” download in exchange for email, with clear consent. Use GDPR/CCPA-compliant tags to show you respect privacy.

6. Generative Engine Optimization (GEO)

GEO ensures your brand gets cited in AI-generated answers:

This involves using structured metadata, GPT-friendly headers, and content designed for AI content tools

It positions your site to be referenced by AI assistants like ChatGPT, Gemini, and Perplexity.

Mana Media tip: Add AI-optimized headings (“According to Mana Media Marketing…”) and JSON-LD structured data. Update FAQs to conversational queries that align with voice and AI prompts.

7. Ethical, Sustainable, and Purpose‑Driven Marketing

Ethics and purpose build trust:

Consumers, especially younger segments, lean toward brands that take authentic stands and highlight sustainable practices

Transparency in supply chains and eco-focused initiatives boosts loyalty and brand perception.

Mana Media tip: Showcase Bangalore sustainability efforts or charity collaborations on your digital marketing website and campaigns. Include case studies that display real-world impact.

8. Omnichannel & Enhanced Local SEO Presence

Consistency matters across platforms:

A unified brand message across website, email, social, and offline keeps engagement strong.

Optimizing local listings (Google Business Profile, formerly Google My Business, and Bing Places) drives visibility for searches like “marketing agency near me”.

Mana Media tip: Create location-specific landing pages—for example, “Digital Marketing agency in Bangalore”—with contact forms, local testimonials, and Google map embeds.

9. Micro‑Influencers & Social Trust

Authentic voices resonate better:

Micro-influencers (5k–50k followers) spark deeper engagement and better ROI via relatable recommendations

Using UGC (user-generated content) builds authenticity and trust.

Mana Media tip: Partner with Bangalore-based micro-influencers to feature your services. Showcase their experiences on your website and social channels for credibility.

10. Interactive & Diverse Content Formats

Keep engagement fresh and dynamic:

Polls, interactive questionnaires, webinars, and live-stream Q&As drive retention and audience connection

Podcasts and video podcasts rank high due to their storytelling power.

Mana Media tip: Host a monthly podcast interviewing local business owners. Promote via email and social, and embed episode snippets on your digital marketing website.

Final Take

The 2025 marketing landscape rewards agility, empathy, and innovation. By integrating AI, voice-first SEO, immersive technology, and purpose-driven storytelling, Mana Media Marketing can lead as a top digital marketing agency, helping businesses through online digital marketing, marketing and SEO, and comprehensive online marketing and advertising strategies.

Position your digital marketing company to tap into hyper-personalization, first-party data, local presence, and emerging tech. Pair it with strong ethical positioning and immersive formats to create brand experiences that connect, convert, and endure.


0 notes

Text

The Role of Structured Data in Web Development: What Agencies Do Differently

In the fast-paced digital landscape, simply having a website isn’t enough. To rank higher in search engines, attract clicks, and offer meaningful experiences to users and search bots alike, modern websites must speak a structured language. This is where structured data plays a crucial role—and why working with a professional Web Development Company can give you a competitive edge.

Structured data helps search engines better understand your site’s content and context. While many businesses overlook its implementation, expert agencies know that structured data is not just an SEO tool—it’s a foundational part of smart, scalable, and future-proof web development.

What Is Structured Data?

Structured data is a standardized format for providing information about a page and classifying its content. It uses schema markup, typically written in JSON-LD format, to describe elements like articles, products, reviews, services, events, and more.

Search engines like Google, Bing, and Yahoo use this data to display rich results—enhanced search listings with star ratings, FAQs, images, price ranges, and other valuable metadata. These visually enriched results often get more attention and better click-through rates than standard listings.

Why Structured Data Matters

While traditional SEO focuses on keywords and backlinks, structured data tells search engines what your content is about in a language they understand. This clarity leads to:

Improved search visibility

Enhanced SERP features (rich snippets)

Higher CTR (Click-Through Rates)

Voice search readiness

Faster content indexing

In short, structured data doesn’t just help search engines find your content—it helps them understand it and rank it better.

What Web Development Agencies Do Differently

Professional web development companies approach structured data with a long-term, technical mindset. Here’s how their approach differs from DIY or freelance efforts:

1. Schema-First Planning from Day One

Most agencies start planning structured data as part of the site architecture and wireframe stage, not as an afterthought. They map key content types (like products, FAQs, articles, or reviews) and match them with relevant schema.org vocabularies. This ensures your website is semantically structured from the ground up.

Why it matters: Early schema planning avoids rework, enhances consistency, and supports future scalability.

2. Custom Schema Markup Based on Business Goals

Instead of using generic schema plugins or templates, professional developers write custom JSON-LD scripts tailored to your niche, services, and customer journey. Whether you're running a local business, SaaS product, or eCommerce store, the structured data is crafted to meet both Google’s guidelines and your conversion objectives.

Why it matters: Accurate, custom schema ensures your content is eligible for the right rich results.

3. Validation and Testing with Schema Tools

A web development company doesn't just implement schema—they validate it thoroughly using Google’s Rich Results Test, Schema Markup Validator, and Search Console reports. This ensures there are no syntax errors, warnings, or mismatched data that could hurt rankings.

Why it matters: Validated schema means fewer indexing issues and improved eligibility for SERP features.

4. Integration with CMS and Dynamic Content

Agencies know how to integrate structured data across CMS platforms like WordPress, Webflow, Shopify, or headless systems. For dynamic pages—such as product listings or blog archives—they automate structured data injection using templates or headless APIs.

Why it matters: Structured data scales seamlessly across your site, even as new content is added.
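A minimal sketch of what template-driven injection can look like: a function that turns one content record into an `Article` JSON-LD script tag, so every new page gets markup automatically. The record keys (`title`, `published`, `author`) are hypothetical and stand in for whatever a given CMS exposes.

```python
import json
from typing import Dict


def article_jsonld_script(record: Dict[str, str]) -> str:
    """Render a schema.org Article JSON-LD <script> tag for one record."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": record["title"],
        "datePublished": record["published"],
        "author": {"@type": "Person", "name": record["author"]},
    }
    # Escape "</" so text such as "</script>" in a title cannot end the tag early.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

The escaped form `<\/` is still valid JSON, so parsers recover the original text unchanged.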

5. Alignment with Core Web Vitals and Page Experience

Structured data is increasingly intertwined with Google’s broader ranking systems. Agencies ensure that schema is aligned with fast load times, mobile usability, and secure connections—all of which influence your Page Experience Score.

Why it matters: Structured data supports overall site health, not just SEO.

6. Ongoing Updates and Monitoring

Google’s structured data guidelines change frequently. Professional agencies offer ongoing support to update your schema, monitor performance, and adjust implementation based on new opportunities—such as Speakable schema for voice search or ImageObject markup for visual search.

Why it matters: Staying updated ensures long-term visibility in an evolving search ecosystem.

Final Thoughts

Structured data is no longer optional—it’s a key pillar of SEO, user experience, and long-term digital success. While anyone can add a plugin or insert a few tags, doing it strategically and correctly requires deep technical knowledge and experience.

That’s why forward-thinking businesses partner with a Web Development Company that understands structured data from both a technical and business perspective. When implemented correctly, structured data turns your website into a rich, readable, and rewarding experience—for both search engines and your audience.

0 notes

Text

How DC Tech Events works

The main DC Tech Events site is generated by a custom static generator, in three phases:

Loop through the groups directory and download a local copy of every calendar. Groups must have an iCal or RSS feed. For RSS feeds, each item is further scanned for an embedded JSON-LD Event description. I’ll extend that to support other metadata formats, like microformats (and RSS feeds that directly include event data) as I encounter them.

Combine that data with any future single events into a single structured file (YAML).

Generate the website, which is built from a Flask application with Frozen-Flask. This includes two semi-secret URLs that are used for the newsletter: a simplified version of the calendar, in HTML and plaintext.

Once a day, and anytime a change is merged into the main branch, those three steps are run by GitHub Actions, and the resulting site is pushed to GitHub Pages.

The RSS + JSON-LD thing might seem arbitrary, but it exists for a mission critical reason: emojis! For meetup.com groups, the iCal feed garbles emojis. It took me some time to realize that my code wasn’t the problem. While digging into it, I discovered that meetup.com event pages include JSON-LD, which preserves emojis. I should probably update the iCal parser to also augment feeds with JSON-LD, but the current system works fine right now.
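For illustration, here is a minimal standard-library sketch of the JSON-LD scanning idea: collect every `application/ld+json` script block from a page and keep the objects typed as `Event`. It is not the site's actual code, and it ignores `@graph` wrappers and list-valued `@type` fields.

```python
import json
from html.parser import HTMLParser
from typing import Any, Dict, List


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of <script type="application/ld+json">."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._buffer: List[str] = []
        self.blocks: List[Any] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # skip malformed blocks rather than fail the whole page


def find_events(html: str) -> List[Dict[str, Any]]:
    """Return every top-level JSON-LD object whose @type is "Event"."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    events = []
    for block in parser.blocks:
        items = block if isinstance(block, list) else [block]
        events.extend(
            item for item in items
            if isinstance(item, dict) and item.get("@type") == "Event"
        )
    return events
```

Because the JSON is parsed as Unicode text, emoji in event names come through intact.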

Anyone who wants to submit a new group or event could submit a Pull Request on the github repo, but most will probably use add.dctech.events. When an event or group is submitted that way, a pull request is created for them (example). The “add” site is an AWS Chalice app.

The newsletter system is also built with Chalice. When someone confirms their email address, it gets saved to a contact list in Amazon’s Simple Email Service (SES). A scheduled task runs every Monday morning that fetches the “secret” URLs and sends every subscriber their copy of the newsletter. SES also handles generating a unique “unsubscribe” link for each subscriber.

(but, why would anyone unsubscribe?)

0 notes

Text

API Response Formats: What to Expect

SprintVerify returns responses in a standardized JSON format with clear success/failure fields. The response includes metadata, result, and optional masking. Understanding the structure helps in automating your workflows and parsing results smoothly.

0 notes

Text

13 Technical SEO Tips You Need to Implement Right Now

Let’s face it: SEO is no longer just about keywords and backlinks. These days, if your site isn’t technically sound, Google won’t even give you a second glance. Whether you're running a blog, eCommerce store, or local business website, technical SEO tips are your backstage passes to visibility, speed, and SERP success.

This isn’t just another generic checklist. We’re diving deep from the technical SEO basics to advanced technical SEO strategies. So buckle up, grab your coffee, and get ready to seriously level up your website.

1. Start with a Crawl: See What Google Sees

Before you tweak anything, see what Google sees. Use tools like Ahrefs Site Audit, Screaming Frog, or Sitebulb to run a site crawl. These will point out:

Broken links

Redirect chains

Missing metadata

Duplicate content

Crawl depth issues

It’s like doing a health check-up before hitting the gym. No use lifting weights with a sprained ankle, right?

2. Fix Crawl Errors and Broken Links Immediately

Crawl errors = blocked search bots = bad news. Head to Google Search Console’s Page indexing report (formerly the Coverage report) and fix:

404 pages

Server errors (500s)

Soft 404s

Redirect loops

Remember: broken links are like potholes on your website’s highway. They stop traffic and damage trust.
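Redirect loops and long chains are easy to spot once the redirects are in a simple lookup table. The sketch below assumes a hypothetical mapping from each redirecting URL to its target, for example from a crawler export, and follows it hop by hop.

```python
from typing import Dict, List, Tuple


def follow_redirects(
    redirects: Dict[str, str], start: str, max_hops: int = 10
) -> Tuple[List[str], str]:
    """Follow redirects from `start`.

    Returns the visited path and a status: "ok" when the chain ends at a
    non-redirecting URL, "loop" when a URL repeats, or "too_long" when the
    chain exceeds max_hops.
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    return path, "ok"
```

Any "ok" path with more than two entries is a redirect chain worth collapsing into a single hop.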

3. Optimize Your Site Speed Like It’s 1999

Okay, maybe not that fast, but you get the idea.

Speed isn't just an experience thing, it's a ranking factor. Here’s how to trim the fat:

Compress images (use WebP or AVIF formats)

Enable lazy loading

Use a CDN

Minify CSS, JS, and HTML

Avoid heavy themes or bloated plugins

This is one of the most impactful technical SEO fixes you can make. Faster site = better UX = higher rankings.

4. Make It Mobile-First or Go Home

Google’s all in on mobile-first indexing. If your site looks like a disaster on a smartphone, you’re practically invisible. Ensure:

Responsive design

Readable fonts

Tap-friendly buttons

Zero horizontal scroll

Test it with Lighthouse’s mobile audit in Chrome DevTools (Google retired its standalone Mobile-Friendly Test in December 2023). Because if mobile users bounce, so does your ranking.

5. Get Your Site Structure Spot-On

Think of your website like a library. If books (pages) aren’t organized, nobody finds what they need. Make sure:

Homepage links to key category pages

Categories link to subpages or blogs

Every page is reachable in 3 clicks max

This clean structure helps search bots crawl everything efficiently, a technical SEO basics win.
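The "3 clicks max" rule can be checked with a breadth-first search over the internal link graph. This sketch assumes a hypothetical `links` mapping from each page to the pages it links to, such as one built from a crawl export.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], home: str) -> Dict[str, int]:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: Dict[str, List[str]], home: str, max_clicks: int = 3) -> List[str]:
    """List reachable pages that need more than max_clicks clicks."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)
```

Pages missing from the result of `click_depths` are not reachable from the homepage at all.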

6. Secure Your Site with HTTPS

Still running HTTP? Yikes. Not only is it a trust-killer, but it’s also a ranking issue. Google confirmed HTTPS is a ranking signal.

Install an SSL certificate, redirect HTTP to HTTPS, and make sure there are no mixed content warnings. You’d be surprised how often folks overlook this simple technical SEO tip.

7. Use Schema Markup for Rich Snippets

Want star ratings, FAQ drops, or breadcrumbs in Google results? Use schema!

Product schema for eCommerce

Article schema for blogs

LocalBusiness schema for service providers

FAQ & How-To schemas for extra real estate in SERPs

Implement via JSON-LD (Google’s favorite) or use plugins like Rank Math or Schema Pro.

8. Eliminate Duplicate Content

Duplicate content confuses search engines. Use tools like Siteliner, Copyscape, or Ahrefs to catch offenders. Then:

Set canonical tags

Use 301 redirects where needed

Consolidate thin content pages

This is especially critical for advanced technical SEO consulting, where multiple domain versions or CMS quirks cause duplicate chaos.

9. Improve Your Internal Linking Game

Internal links spread link equity, guide crawlers, and keep users browsing longer. Nail it by:

Linking from old to new content (and vice versa)

Using descriptive anchor text

Keeping links relevant

Think of internal links as signboards inside your digital shop. They tell people (and bots) where to go next.

10. Don’t Sleep on XML Sitemaps & Robots.txt

Your XML sitemap is a roadmap for bots. Your robots.txt file tells them what to ignore.

Submit sitemap in Google Search Console

Include only indexable pages

Use robots.txt wisely (don’t accidentally block JS or CSS)

Sounds geeky? Maybe. But this combo is one of the advanced technical SEO factors that separates rookies from pros.
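Accidentally blocking CSS or JS is easy to test for before you deploy a robots.txt change. This sketch uses Python's built-in `urllib.robotparser`. The rules and URLs are invented for the example, and the standard-library parser does not understand every pattern Google supports (wildcards, for instance), so treat it as a first check only.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs the given robots.txt forbids for this user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt that blocks a whole assets folder.
robots = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/
"""

urls = [
    "https://example.com/",
    "https://example.com/admin/login",
    "https://example.com/assets/site.css",
    "https://example.com/assets/app.js",
]

blocked = blocked_urls(robots, urls)
blocked_assets = [u for u in blocked if u.endswith((".css", ".js"))]
print(blocked_assets)
```

Here the `/assets/` rule was probably meant to hide uploads, but it also hides the stylesheet and script Google needs to render the page.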

11. Check Indexing Status Like a Hawk

Just because a page exists doesn’t mean Google sees it. Go to Google Search Console > Pages > “Why pages aren’t indexed” and investigate.

Watch for:

Noindex tags

Canonicalization conflicts

Blocked by robots.txt

Monitoring indexing status regularly is essential, especially when offering technical SEO services for local businesses that depend on full visibility.

12. Avoid Orphan Pages Like the Plague

Pages with no internal links = orphaned. Bots can’t reach them easily, which means no indexing, no traffic.

Find and fix these by:

Linking them from relevant blogs or service pages

Updating your navigation or sitemap

This is an often-missed on page SEO technique that can bring old pages back to life.
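Finding orphans boils down to a set difference: pages you know exist (your sitemap, for example) minus pages that something else links to. A minimal sketch with made-up paths, assuming you already have both lists exported:

```python
def find_orphans(known_pages: set[str], links: dict[str, set[str]], home: str = "/") -> set[str]:
    """Pages that exist but receive no internal link from any other page."""
    linked_to = set()
    for source, targets in links.items():
        # A page linking to itself does not make it reachable.
        linked_to.update(t for t in targets if t != source)
    # The homepage is the entry point, so it is never counted as an orphan.
    return known_pages - linked_to - {home}


# Hypothetical data: sitemap entries and the internal links found on each page.
sitemap = {"/", "/services", "/blog/post-1", "/old-landing-page"}
links = {
    "/": {"/services", "/blog/post-1"},
    "/services": {"/"},
    "/old-landing-page": {"/old-landing-page"},
}

print(find_orphans(sitemap, links))
```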

13. Upgrade to Core Web Vitals (Not Just PageSpeed)

It’s not just about speed anymore. Google wants smooth sailing. Enter Core Web Vitals:

LCP (Largest Contentful Paint): Measures loading

INP (Interaction to Next Paint): Measures interactivity (it replaced FID, First Input Delay, in March 2024)

CLS (Cumulative Layout Shift): Measures stability

Use PageSpeed Insights or Lighthouse to test and fix. It's a must-have if you’re targeting strong on-page SEO results.
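The numbers those tools report only mean something next to Google's published thresholds. Here's a small sketch that rates a measurement as good, needs improvement, or poor. It covers LCP, CLS, and INP (Interaction to Next Paint, the interactivity metric Google currently uses). The sample measurements are invented.

```python
# (good up to, poor above) for each metric, per Google's Core Web Vitals guidance.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify one measurement against the thresholds above."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
page = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
for metric, value in page.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```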

14. Partner with a Pro (Like Elysian Digital Services)

If your brain’s spinning from all these technical SEO tips, hey, you're not alone. Most business owners don’t have time to deep-dive into audits, schemas, redirects, and robots.txt files.

That’s where pros come in. If you’re looking for advanced technical SEO consulting or even a full stack of on-page SEO techniques, Elysian Digital Services is a solid bet. Whether you're a startup or a local biz trying to crack the Google code, we've helped tons of businesses get found, fast.

Final Thoughts

There you have 13 technical SEO tips (and a bonus one!) that are too important to ignore. From speeding up your site to fixing crawl issues, each one plays a crucial role in helping your pages rank, convert, and grow.

The web is crowded, the competition’s fierce, and Google isn’t getting any easier to impress. But with the right tools, a bit of tech savvy, and the right support (yep, like Elysian Digital Services), you can absolutely win this game.

#advanced technical seo#advanced technical seo factors#powerful technical seo guides#advanced technical seo consulting#technical seo services for local businesses#ahrefs technical seo guide#powerful on-page seo services

Text

Integrate the Objaverse model library

Supplement 3D models by locating a model library on Hugging Face. Merge 160 JSON files (each containing model metadata) for subsequent semantic matching with the vectorized descriptions from news and drama texts.

link:https://huggingface.co/datasets/allenai/objaverse/tree/main
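A minimal sketch of the merge step, assuming the metadata files have already been downloaded and unpacked into a local folder as plain `.json` files, and that each file holds a single JSON object keyed by model ID. The folder name in the usage comment is hypothetical.

```python
import json
from pathlib import Path


def merge_metadata(folder: str) -> dict:
    """Merge every *.json file in `folder` into one dictionary.

    Assumes each file contains a JSON object (model ID -> metadata).
    If the same ID appears in two files, the later file wins.
    """
    merged = {}
    for path in sorted(Path(folder).glob("*.json")):
        with path.open(encoding="utf-8") as f:
            merged.update(json.load(f))
    return merged


# Usage (hypothetical folder name):
#   metadata = merge_metadata("objaverse_metadata")
#   print(len(metadata), "models loaded")
```

The merged dictionary can then be passed to whatever step builds the text embeddings for semantic matching.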

Text

Complete On-Page SEO Techniques List for E-Commerce Websites

E-commerce SEO is a different beast than optimizing blogs or service pages. It requires technical precision, strategic planning, and user-first execution to stay ahead of competitors and improve organic performance. In this guide, we’ll cover a complete list of on-page SEO techniques tailored to e-commerce websites, from category and product page optimization to structured data and mobile UX.

Optimizing Product Titles, Meta Tags, and URLs

One of the most important areas for on-page SEO in e-commerce is optimizing product and category-level metadata. Each product page should have a unique title tag that includes the product name and relevant attributes like size, color, or brand. For example, a well-optimized title might be: "Nike Air Zoom Pegasus 40 Men’s Running Shoes – Black, Size 10".

Similarly, meta descriptions must summarize product benefits, highlight key features, and include persuasive CTAs: "Shop Nike Air Zoom Pegasus 40 – Cushioned men’s running shoes with responsive support. Free shipping available. Order now!". Your product URLs should be clean and keyword-rich (e.g., /mens-shoes/nike-air-zoom-pegasus-40), avoiding dynamic parameters and unnecessary clutter. Well-structured metadata improves click-through rates, relevance, and search visibility.
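Clean URLs are easiest to keep consistent when they are generated rather than typed by hand. One possible way to build slugs like the one above is sketched here. The function names are made up for illustration, and non-ASCII characters are simply dropped, which may not suit every catalog.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase, ASCII-only, hyphen-separated version of a product or category name."""
    # Remove apostrophes first so "Men's" becomes "mens" rather than "men-s".
    text = re.sub(r"['’]", "", text)
    # Strip accents and any other non-ASCII characters.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of other characters into a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower())
    return text.strip("-")


def product_url(category: str, name: str) -> str:
    return f"/{slugify(category)}/{slugify(name)}"


print(product_url("Men's Shoes", "Nike Air Zoom Pegasus 40"))
```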

Schema Markup for Product, Review, and Breadcrumbs

E-commerce websites benefit tremendously from structured data to appear as rich snippets in search results. By adding schema markup for Product, Review, Offer, and BreadcrumbList, you can provide search engines with detailed information about your inventory. This includes price, availability, ratings, and product condition.

For example, applying the Product schema can lead to search results displaying star ratings, price, and "In Stock" tags, which can noticeably improve visibility and CTR. Use Google’s Structured Data Markup Helper or a plugin like Yoast WooCommerce SEO to implement JSON-LD markup. Breadcrumb markup improves navigation for both users and bots, allowing search engines to show category paths in SERPs. For modern e-commerce SEO, schema is a necessity, not an option.
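For a sense of what that markup looks like, here is a sketch that builds a Product object with a nested Offer and AggregateRating. The price, rating, and review count are invented, and a production feed would pull them from your catalog.

```python
import json


def product_jsonld(name, brand, price, currency, in_stock, rating, review_count):
    """Build a schema.org Product with price, availability, condition, and ratings."""
    availability = "InStock" if in_stock else "OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
            "itemCondition": "https://schema.org/NewCondition",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Made-up values for illustration only.
markup = product_jsonld(
    name="Nike Air Zoom Pegasus 40 Men's Running Shoes",
    brand="Nike",
    price=129.99,
    currency="USD",
    in_stock=True,
    rating=4.6,
    review_count=212,
)
print(json.dumps(markup, indent=2))
```

The printed JSON goes inside a `<script type="application/ld+json">` tag on the product page.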

Category Page Optimization: Titles, Content, and Linking

Category pages often serve as major ranking opportunities for transactional keywords. To optimize them, start with a descriptive H1 that includes the main category keyword. Add 150–300 words of unique content above or below the product listings, explaining what the category includes, top brands, and key benefits.

Internal links should be used to guide users to subcategories, featured products, or relevant blog content. For example, a "Running Shoes" category page could link to "Best Running Shoes for Flat Feet" or "Top 10 Shoes for Marathon Training." Avoid thin content and ensure every category page has custom metadata, alt-texted images, and a clean URL (e.g., /running-shoes/mens). Keep filters and sorting options crawlable with canonical tags and consistent URL parameter handling (Google retired Search Console’s URL Parameters tool in 2022, so this now has to be handled on the site itself).

Image Optimization and Visual Search Readiness

High-quality product images are a core component of e-commerce success, but they must be optimized for speed, SEO, and search discoverability. Start by compressing all images using modern formats like WebP or AVIF without compromising visual quality (JPEG XR is not supported by current browsers). Use descriptive file names (black-nike-pegasus-shoe.webp) and alt text (Nike Air Zoom Pegasus 40 in black color, side view).

Include structured image captions, product zoom, and 360-degree views where possible. Mobile users should be able to swipe and view images quickly. Visual search is rising fast—optimized product images are more likely to appear in Google Images, Pinterest, and visual search engines. Add Open Graph and Twitter Card metadata to enhance image sharing previews on social platforms and integrate lazy loading (loading="lazy") for performance gains.

Mobile-First Design and Page Experience Optimization

With over 60% of shopping sessions starting on mobile, your e-commerce site must offer a mobile-first, Core Web Vitals-optimized experience. Responsive design is no longer optional—it’s a search ranking factor. Optimize LCP (Largest Contentful Paint) by minimizing render-blocking scripts and using a CDN. Improve CLS (Cumulative Layout Shift) by predefining media dimensions and reducing ad/script movement.

Implement touch-friendly navigation, large CTA buttons, and sticky headers or carts. Mobile checkout should be seamless with minimal steps and auto-filled fields. Use tools like Google PageSpeed Insights, Lighthouse, and web.dev to test performance. Google’s Page Experience Update rewards mobile-friendly, fast, and stable pages, which is especially important for high-bounce e-commerce environments.

Internal Linking, Navigation, and Crawl Depth

Internal linking isn’t just for blogs. In e-commerce, it improves crawlability, user discovery, and conversion pathways. Ensure that your navigation includes category-level links, breadcrumbs, and mega menus that follow a logical, crawlable structure. For example, a product page might include links to:

Related items (“Customers also bought”)

Recently viewed items

Upsell or cross-sell products

Category or parent category

Use anchor text that reflects product attributes or categories instead of generic phrases like “click here.” Review crawl depth to ensure that no product is more than three clicks from the homepage. Orphaned pages are a red flag: tools like Screaming Frog, Sitebulb, or Ahrefs Site Audit can help identify and fix them. A well-planned internal linking strategy also improves site authority distribution and keeps users engaged longer.

Unique Content and Keyword Optimization for Product Pages

E-commerce sites often suffer from duplicate content due to similar product descriptions, especially across size/color variants or multi-vendor items. To combat this, write unique product descriptions that highlight use cases, features, materials, and value propositions. Avoid copying manufacturer descriptions.

Incorporate primary and secondary keywords naturally throughout the product page—including the title, H1, image alt text, bullet points, and reviews. Include FAQ sections with schema markup to answer common buyer questions, reduce bounce rate, and boost rankings for long-tail queries. UGC (user-generated content) like reviews and Q&As also contribute to content freshness and keyword diversity. Pages with rich, relevant, and original content outperform thin or duplicated pages every time.

Conclusion: Building a Search-Optimized E-Commerce Engine

On-page SEO for e-commerce websites is more than tweaking keywords—it's about crafting a user-friendly, fast, and highly discoverable storefront that speaks to both humans and search engines. From schema and internal linking to image optimization and mobile UX, every technique works together to improve visibility, drive conversions, and reduce dependency on paid traffic.

By implementing this complete list of on-page SEO techniques, you position your store for long-term success. E-commerce is competitive—but with the right on-page strategy, your products can rise above the noise and connect directly with your ideal customers. Invest in optimization now to build a search-friendly foundation that scales with your business.

Text

API Vulnerabilities in Symfony: Common Risks & Fixes

Symfony is one of the most robust PHP frameworks used by enterprises and developers to build scalable and secure web applications. However, like any powerful framework, it’s not immune to security issues—especially when it comes to APIs. In this blog, we’ll explore common API vulnerabilities in Symfony, show real coding examples, and explain how to secure them effectively.

We'll also demonstrate how our Free Website Security Scanner helps identify these vulnerabilities before attackers do.

🚨 Common API Vulnerabilities in Symfony

Let’s dive into the key API vulnerabilities developers often overlook:

1. Improper Input Validation

Failure to sanitize input can lead to injection attacks.

❌ Vulnerable Code:

// src/Controller/ApiController.php
public function getUser(Request $request)
{
    // User input goes straight into the SQL string, so it is injectable.
    $id = $request->query->get('id');
    $user = $this->getDoctrine()
        ->getConnection()
        ->fetchAssociative("SELECT * FROM users WHERE id = $id");

    return new JsonResponse($user);
}

✅ Secure Code with Param Binding:

public function getUser(Request $request)
{
    // Cast the ID to an integer; find() binds it as a query parameter.
    $id = (int) $request->query->get('id');
    $user = $this->getDoctrine()
        ->getRepository(User::class)
        ->find($id);

    return new JsonResponse($user);
}

Always validate and sanitize user input, especially IDs and query parameters.

2. Broken Authentication

APIs that don’t properly verify tokens or allow session hijacking are easy targets.

❌ Insecure Token Check:

if ($request->headers->get('Authorization') !== 'Bearer SECRET123') {
    throw new AccessDeniedHttpException('Unauthorized');
}

✅ Use Symfony’s Built-in Security:

# config/packages/security.yaml
security:
    firewalls:
        api:
            pattern: ^/api/
            stateless: true
            jwt: ~

Implement token validation using LexikJWTAuthenticationBundle to avoid manual and error-prone token checking.

3. Overexposed Data in JSON Responses

Sometimes API responses contain too much information, leading to data leakage.

❌ Unfiltered Response:

return $this->json($user); // Might include password hash or sensitive metadata

✅ Use Serialization Groups:

// src/Entity/User.php
use Symfony\Component\Serializer\Annotation\Groups;

class User
{
    /**
     * @Groups("public")
     */
    private $email;

    /**
     * @Groups("internal")
     */
    private $password;

    // Getters and setters omitted for brevity.
}

// In controller
return $this->json($user, 200, [], ['groups' => 'public']);

Serialization groups help you filter sensitive fields based on context.

🛠️ How to Detect Symfony API Vulnerabilities for Free

[Screenshot: homepage of the free tools webpage, where you can access the security assessment tools.]

Manual code audits are helpful but time-consuming. You can use our free Website Security Checker to automatically scan for common security flaws including:

Open API endpoints

Broken authentication

Injection flaws

Insecure HTTP headers

🔎 Try it now: https://free.pentesttesting.com/

[Screenshot: example vulnerability assessment report generated with our free tool, providing insights into possible vulnerabilities.]

✅ Our Web App Penetration Testing Services

For production apps and high-value APIs, we recommend deep testing beyond automated scans.

Our professional Web App Penetration Testing Services at Pentest Testing Corp. include:

Business logic testing

OWASP API Top 10 analysis

Manual exploitation & proof-of-concept

Detailed PDF reports

💼 Learn more: https://www.pentesttesting.com/web-app-penetration-testing-services/

📚 More Articles from Pentest Testing Corp.

For in-depth cybersecurity tips and tutorials, check out our main blog:

🔗 https://www.pentesttesting.com/blog/

Recent articles:

Laravel API Security Best Practices

XSS Mitigation in React Apps

Threat Modeling for SaaS Platforms

📬 Stay Updated: Subscribe to Our Newsletter

Join cybersecurity enthusiasts and professionals who subscribe to our weekly threat updates, tools, and exclusive research:

🔔 Subscribe on LinkedIn: https://www.linkedin.com/build-relation/newsletter-follow?entityUrn=7327563980778995713

💬 Final Thoughts

Symfony is powerful, but with great power comes great responsibility. Developers must understand API security vulnerabilities and patch them proactively. Use automated tools like our Website Security Checker, adopt secure coding practices, and consider penetration testing for deeper coverage.

Happy Coding—and stay safe out there!

#cyber security#cybersecurity#data security#pentesting#security#coding#symfony#the security breach show#php#api
